About

Researcher & Robotics Engineer

Currently, I am working at Magna Electronics as a Systems Engineer on Advanced Driver Assistance Systems (ADAS) features with the Functional Safety and Systems team. I received my Bachelor of Science degree in Robotics Engineering from the University of Michigan - Dearborn, where I still work as a Research Assistant under an esteemed professor. There I contribute to several research projects on automotive cybersecurity at the Information Systems, Security, and Forensics Lab, alongside a great team of accomplished PhD students.

ISSF Research Lab - click here for the research lab website

- Graduation: Winter 2022

- Phone: +1 313-394-9140

- Location: Michigan, USA

- Age: 24

- Degree: BSE in Robotics Engineering

- Email: dkhuttan@umich.edu

Inspiration

I joined a Robotics Club in middle school, and my interest in robotics has only grown since then. Getting my hands dirty building working projects from scratch became my new addiction, and I have kept building hobby projects that solve problems or help people with their day-to-day activities. During high school, I competed in international robotics competitions for 2 straight years, once at the University of Washington in Seattle (UW Seattle) and once at the Singapore University of Technology & Design (SUTD), where I secured 3rd place internationally.

Exploring the University of Washington made me realize that I wanted to move to the US to be open to more opportunities. After being accepted at the University of Michigan - Dearborn and starting my degree in Fall 2018, I wanted to get more involved in research, since it demands critical thinking and problem-solving skills and opens up a new world of the hands-on experience I already loved! Working as a Research Assistant made it clear that I should pursue a career as a researcher and work my way toward a PhD from a top school!

Resume

Education

Bachelor of Science in Robotics Engineering

Fall 2018 - Winter 2022

University of Michigan - Dearborn

- With Distinction

- Department of Electrical and Computer Engineering

- Experiential Honors Program

- Intelligent Systems Club

- Formula SAE

Publications

- “Protecting Voice-Controlled Devices against LASER Injection Attacks”, IEEE Workshop on Information Forensics and Security (WIFS), Dec 2023 – Accepted, to be presented at the conference

- “Physical Fingerprinting of Ultrasonic Sensors and Applications to Sensor Security”, IEEE International Conference on Dependability in Sensor, Cloud, and Big Data Systems and Applications (DependSys), Dec 2020

- “A Survey on State-of-the-Art Internet of Vehicles Security Techniques”, IJEER, 2021 – Accepted

- "A Survey on State-of-the-Art Autonomous Vehicle Architecture, V2X Wireless Communication Networks, and Future Directions with 5G Evolution", SAE International Journal of Connected and Automated Vehicles, 2021 – Under review

- “Liability for the Damage Caused by Autonomous Vehicles”, IJCSN, 2021 – Under review

Technical and Professional Skills

- MATLAB

- Simulink

- C & C++

- Arduino

- Raspberry Pi

- Machine Learning models

- AutoCAD

- CAN

- Python

- Google AIY Voice Hat

- Assembly Language

- Visual Studio

- ISO 26262

Technical Projects

I am really passionate about working on projects and solving real-world problems. Below are some of the projects I have worked on. Please feel free to reach out if you want to know more about any of them!

Professional Experience

Magna Electronics

June 2022 - Present

Systems Engineer

- Contribute to the development of Advanced Driver Assistance Systems (ADAS) features for an electric vehicle

- Assist in building the CANoe configuration for the ADAS ECU, Front Camera Module ECU, and radars

- Verify that all ECUs satisfy the system requirements

- Performed 300+ hours of vehicle-level test cases

- Led a team of 5 engineers at an onsite customer location for 4 months

- Supported the launch of the first Fisker Ocean in the USA

- Debugged and resolved ADAS related issues on customer cars

- Calibrated cameras and radars on 100+ vehicles

- Performed feature testing to make sure everything worked properly before delivering the cars to customers

- Visited the first 10 VIP customers and demonstrated the autonomous features of the cars

- Work with the Software, Features, and Application teams to further improve the performance of the autonomous features

- Assist in building Autonomous Parking features for Rivian and Fisker vehicles

- Perform system level testing on the Ultrasonic Sensors

- Work with the software team to configure the sensors for optimal results

- Collect and analyze lab and in-vehicle data

- Configure the radar modules to obtain the desired SNR values

Magna Electronics

May 2021 - Aug 2021

Jan 2022 - Apr 2022

Intern – Functional Safety and Cybersecurity

- Used Vultara software to understand the threat models for the system of components in a Fisker car

- Studied how the ADAS modules, including the front camera, surround-view camera, and radar, work in accordance with the ISO 26262 standard

- Learned the basics of cryptography and how different types of cryptography protect data and communications

- Learned how private and public keys are used to secure ECU-module communication

- Documented the lessons learned from a past Toyota project to ensure all the steps are clear and followed correctly

National Science Foundation (NSF-REU)

May 2020 - Present

Research Experience for Undergraduates

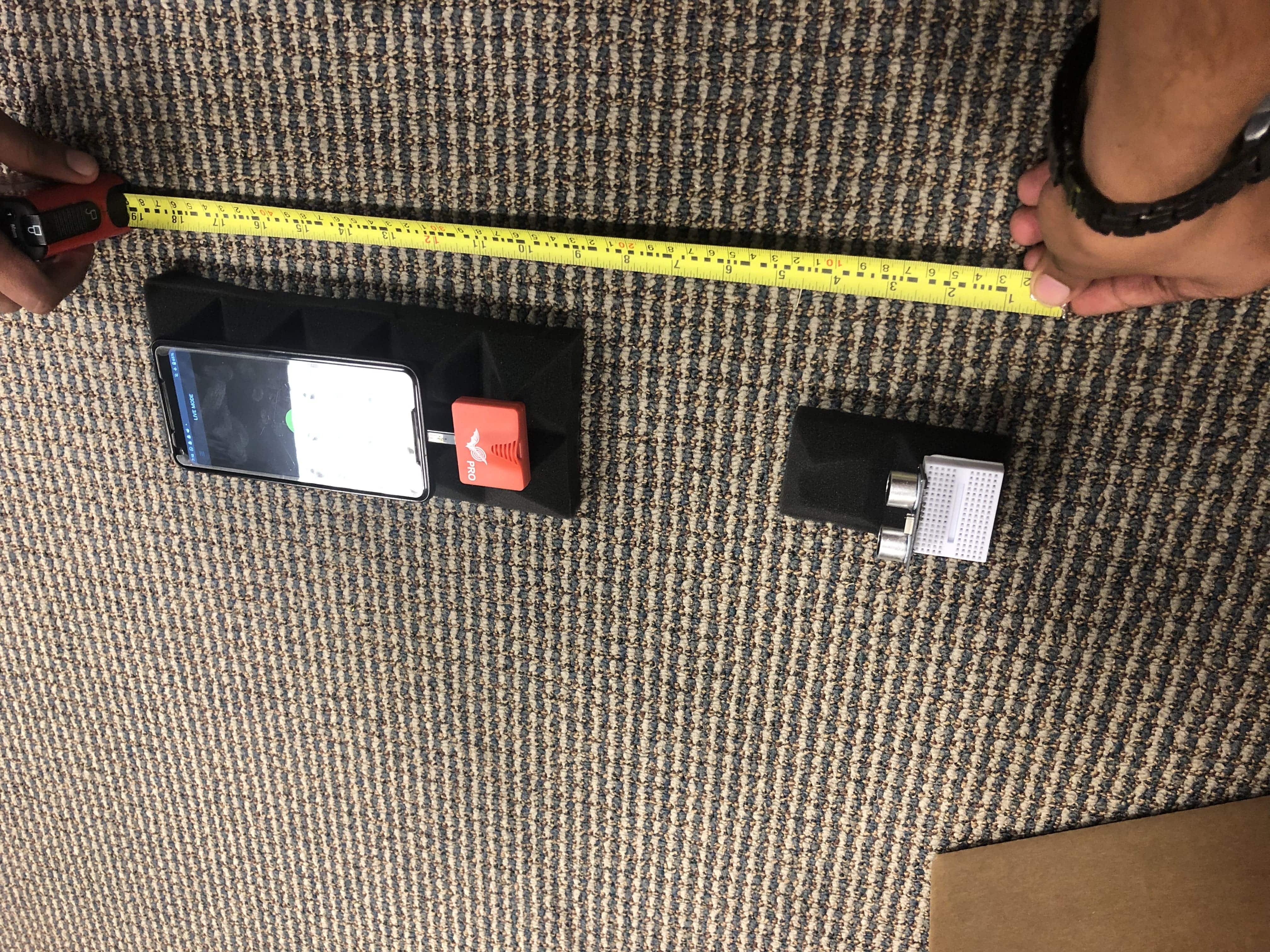

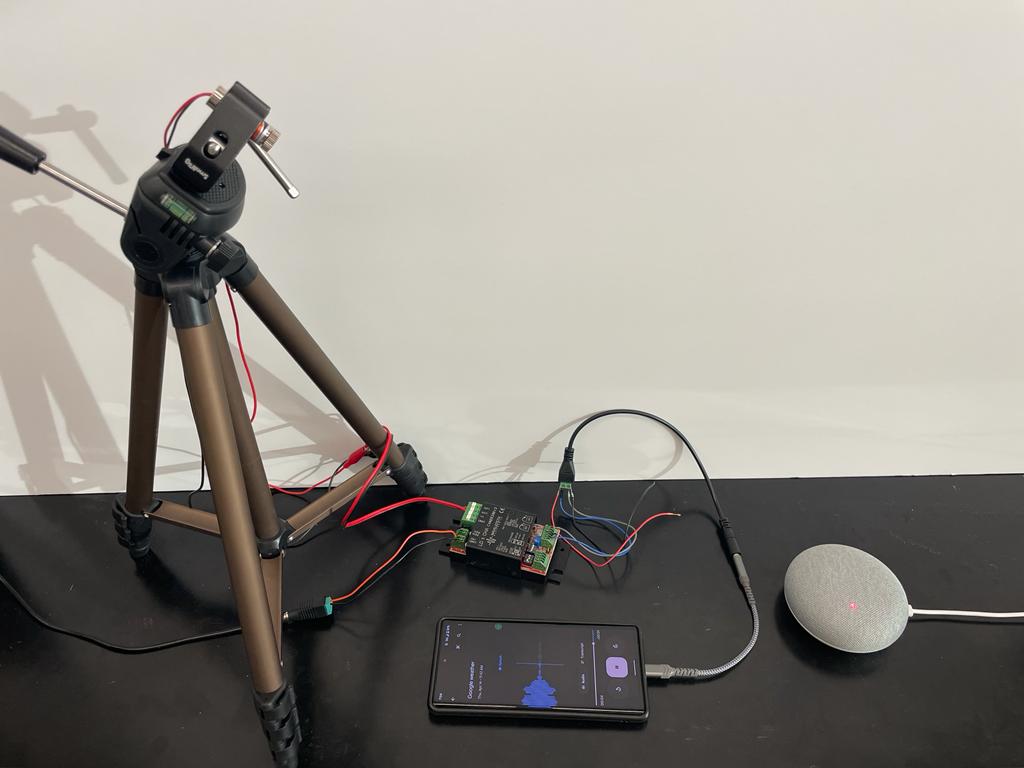

- Constructed a setup of 3 components (audio amplifier, laser current driver, and laser diode) that modulates audio signals onto a laser beam fired at smart devices’ microphones

- Performed audio-injection laser attacks on a Google Home Mini and an Amazon Echo Dot (Alexa), cross-checking the results with an oscilloscope

- Built the Google AIY Voice Hat with a Raspberry Pi and collected data for wavelet decomposition
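Modulating audio "into a laser beam" usually means amplitude modulation: the audio waveform rides on a DC bias current, so the laser's light intensity follows the audio. Assuming that scheme, the sketch below maps an audio waveform to a laser-diode drive-current waveform. The bias, modulation depth, and current limit are hypothetical placeholder values, not the ones used in the actual setup, and in the real rig the laser current driver does this in analog hardware.

```python
import numpy as np

def laser_drive_current(audio, bias_ma=200.0, depth_ma=150.0, max_ma=400.0):
    """Amplitude-modulate a laser diode drive current with an audio waveform.

    bias_ma, depth_ma and max_ma are hypothetical values in milliamps.
    """
    audio = np.asarray(audio, dtype=float)
    peak = np.max(np.abs(audio))
    normalized = audio / peak if peak > 0 else audio  # scale audio to [-1, 1]
    # The DC bias keeps the current positive, so light intensity tracks the audio.
    current = bias_ma + depth_ma * normalized
    # Never go negative or exceed the (assumed) current limit of the driver.
    return np.clip(current, 0.0, max_ma)

# Usage: one cycle of a 1 kHz test tone sampled at 48 kHz.
fs = 48_000
tone = np.sin(2 * np.pi * 1_000 * np.arange(48) / fs)
drive = laser_drive_current(tone)
print(drive.min(), drive.max())
```

Keeping the modulation depth smaller than the bias is the design choice that stops the current from clipping at zero, which would distort the injected audio.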

University of Michigan – CECS Researcher

May 2019 - Present

Research Assistant

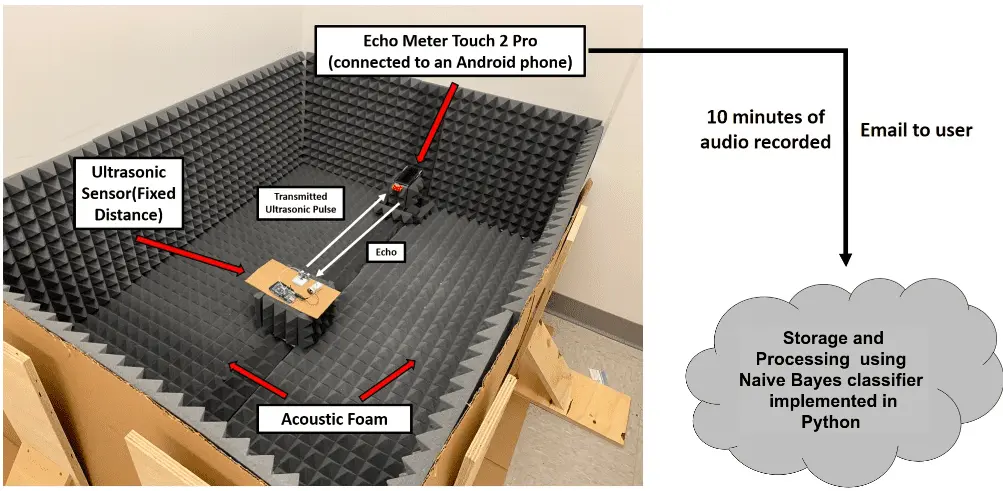

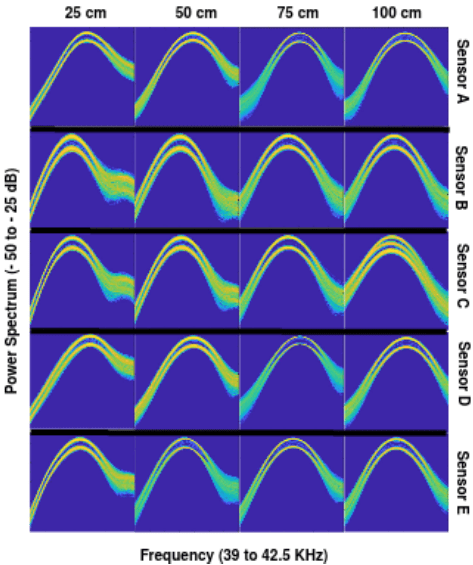

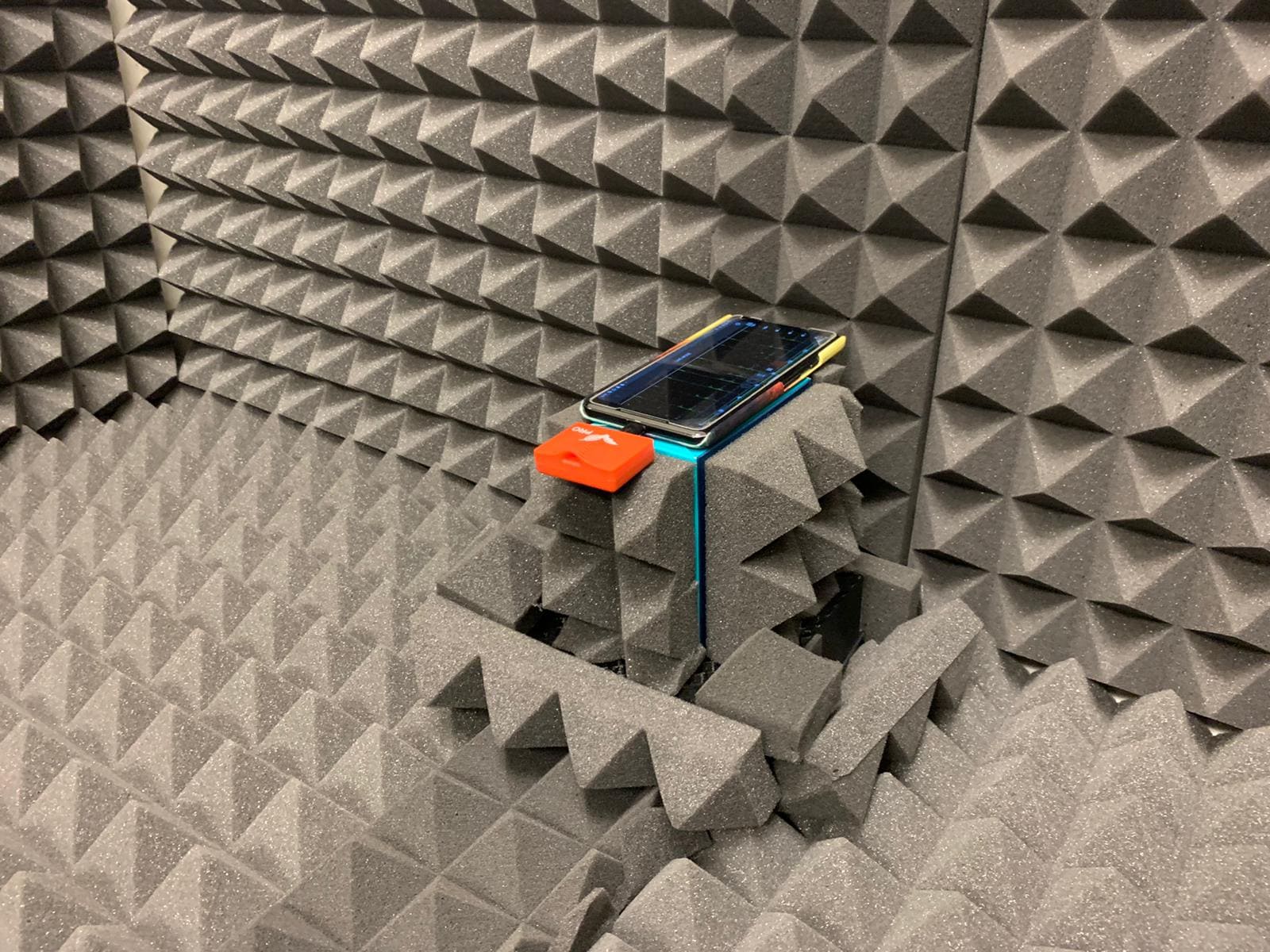

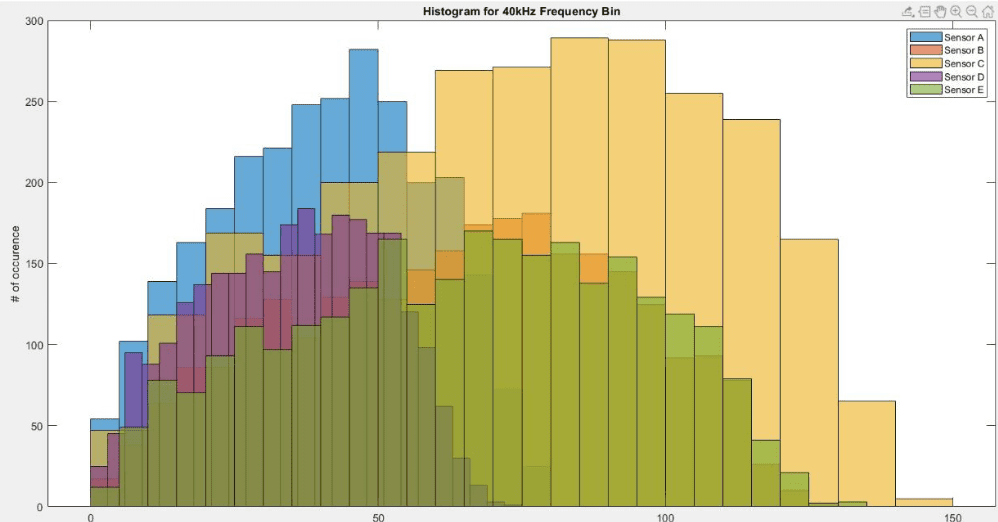

- Performed physical fingerprinting of the ultrasonic sensors of a Ford Fusion using Gaussian Naïve Bayes classification

- Collected data from the sensors in a controlled environment and performed tests using machine learning

- Developed an algorithm that checks the authenticity of the received signal to protect the data from external attacks
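The fingerprinting work in these bullets pairs joint time-frequency features with a Gaussian Naive Bayes classifier. The sketch below illustrates that pairing under heavy assumptions: the feature is simply the mean short-time Fourier transform (STFT) magnitude per frequency bin, the classifier is a from-scratch NumPy Gaussian Naive Bayes, and the two "sensors" are synthetic 40 kHz signals that differ only in their second-harmonic distortion. None of this is the paper's actual feature set, data, or results.

```python
import numpy as np

def stft_features(signal, frame_len=64, hop=32):
    """Joint time-frequency feature vector: the mean STFT magnitude per frequency bin."""
    window = np.hanning(frame_len)
    frames = np.array([signal[i:i + frame_len] * window
                       for i in range(0, len(signal) - frame_len + 1, hop)])
    spectrogram = np.abs(np.fft.rfft(frames, axis=1))  # shape: (n_frames, n_bins)
    return spectrogram.mean(axis=0)

class GaussianNaiveBayes:
    """Per-class Gaussian likelihood for each feature; features assumed independent."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.mean_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        # A small variance floor avoids division by zero for near-constant features.
        self.var_ = np.array([X[y == c].var(axis=0) for c in self.classes_]) + 1e-6
        self.log_prior_ = np.log([np.mean(y == c) for c in self.classes_])
        return self

    def predict(self, X):
        diff = X[:, None, :] - self.mean_[None, :, :]  # (n_samples, n_classes, n_features)
        log_lik = -0.5 * (np.log(2 * np.pi * self.var_)[None] + diff ** 2 / self.var_[None]).sum(axis=2)
        return self.classes_[np.argmax(log_lik + self.log_prior_, axis=1)]

def simulate_sensor(harmonic_gain, rng, n=2048, fs=400_000.0, f0=40_000.0):
    """Hypothetical sensor: a 40 kHz burst whose 2nd-harmonic level is its 'fingerprint'."""
    t = np.arange(n) / fs
    phase = rng.uniform(0, 2 * np.pi)
    x = np.sin(2 * np.pi * f0 * t + phase) + harmonic_gain * np.sin(2 * np.pi * 2 * f0 * t + 2 * phase)
    return x + 0.05 * rng.standard_normal(n)

rng = np.random.default_rng(0)
gains = {0: 0.05, 1: 0.20}  # two simulated sensors with different distortion

def make_set(k):
    X = np.array([stft_features(simulate_sensor(gains[c], rng)) for c in gains for _ in range(k)])
    y = np.array([c for c in gains for _ in range(k)])
    return X, y

X_train, y_train = make_set(20)
X_test, y_test = make_set(10)
model = GaussianNaiveBayes().fit(X_train, y_train)
accuracy = np.mean(model.predict(X_test) == y_test)
print(f"accuracy: {accuracy:.2f}")
```

Averaging the spectrogram over time keeps the feature vector short (33 bins here), which suits Naive Bayes; a real fingerprint would likely need richer time-frequency features than this toy version.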

Download Resume - click here

Publications

- H. Ali, D. Khuttan, R. Refat, and H. Malik, “Protecting Voice-Controlled Devices against LASER Injection Attacks”, IEEE Workshop on Information Forensics and Security (WIFS), Dec 2023 – Accepted, to be presented at the conference

- E. Cheek, D. Khuttan, R. Changalvala and H. Malik, "Physical Fingerprinting of Ultrasonic Sensors and Applications to Sensor Security," 2020 IEEE 6th International Conference on Dependability in Sensor, Cloud and Big Data Systems and Application (DependSys), 2020, pp. 65-72, doi: 10.1109/DependSys51298.2020.00018.

Research Projects

Physical Fingerprinting of Ultrasonic Sensors and Applications to Sensor Security

NSF Award

As the market for autonomous vehicles advances, the need for robust safety protocols increases as well. Autonomous vehicles rely on sensors to understand their operating environment. Sensors such as cameras, LiDAR, ultrasonic sensors, and radar are vulnerable to physical-channel attacks. One way to counter these attacks is to pattern-match the sensor data against the sensor's own unique physical distortions, commonly referred to as a fingerprint. This fingerprint exists because of how the sensor was manufactured, and it can be used to identify the transmitting sensor from the received waveform. In this paper, using an ultrasonic sensor, we establish that the transmitted waveform carries a specific distortion profile, called a physical fingerprint, that can be attributed to the sensor's intrinsic characteristics. We propose a joint time-frequency analysis-based framework for extracting ultrasonic sensor fingerprints and use them as features to train a Naive Bayes classifier. The trained model is used to identify the transmitter from the received physical waveform.

Protecting Voice-Controlled Devices against LASER Injection Attacks

As smart assistant devices grow in popularity, the threats to these devices are growing too. People tend to buy and connect smart devices throughout their homes so that everything is convenient to operate. For example, you can connect your garage door to Google Assistant or Alexa and simply give voice commands to your Google Home or Amazon Echo Dot to open and close it. In a paper from a University of Michigan research group, the researchers were able to issue commands to these smart assistant devices by modulating voice signals onto a laser and firing the laser at the devices' MEMS microphones.

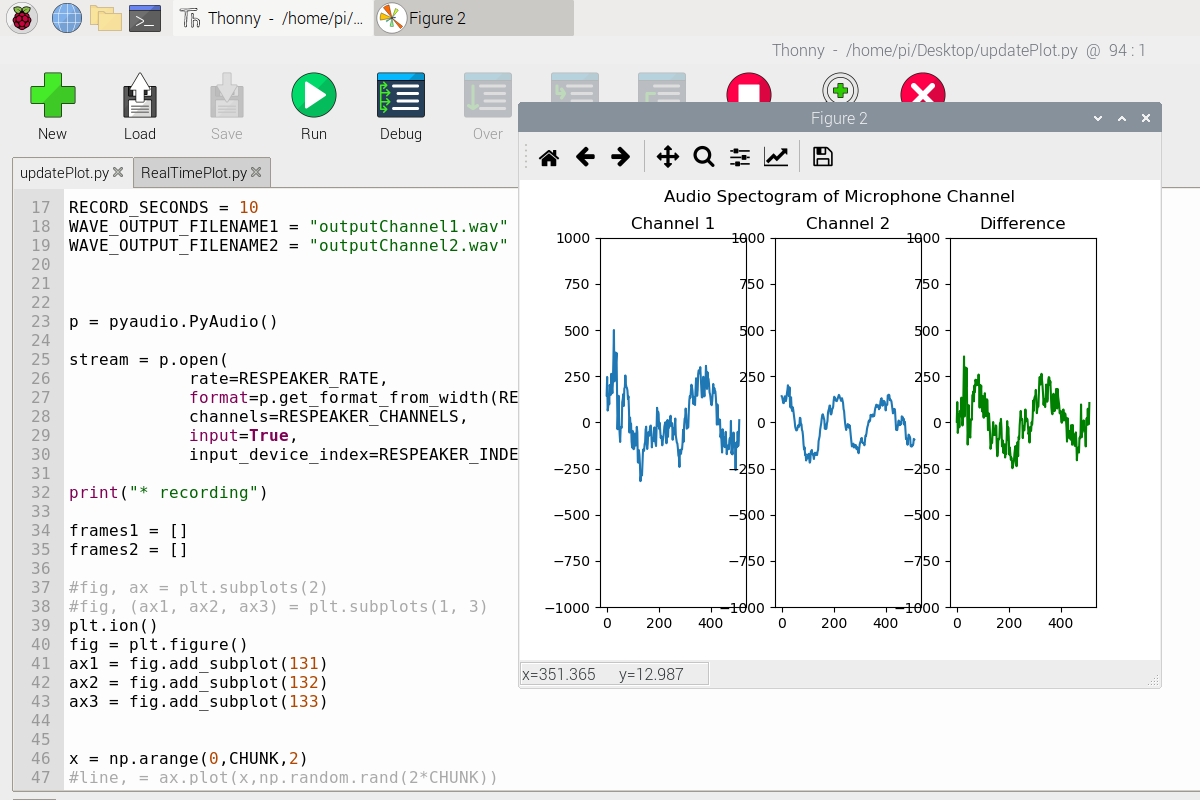

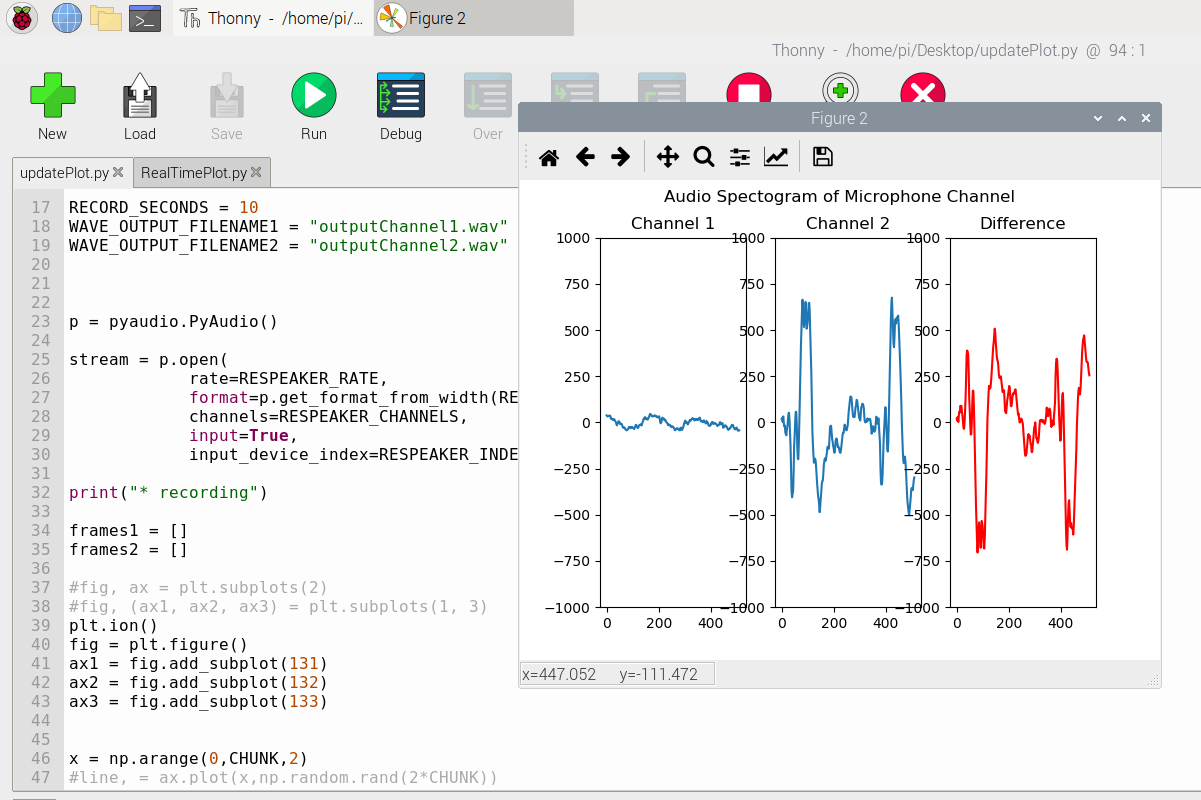

In our research, we defended against this injection attack by introducing a system that detects it. These smart assistant devices have been observed to have at least 2 MEMS microphones. Using a Raspberry Pi and the Google AIY Voice Hat kit, we built a replica of a Google Home, which gave us access to each microphone's individual channel. We collected data through each channel and introduced a real-time detection system that computes the difference between the signals captured by each channel. The reasoning is that when you talk to the assistant normally, every microphone picks up nearly the same signal, whereas during a laser attack the signal in the targeted channel differs greatly from the others, so the attack is detected.
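The channel-comparison idea above can be sketched as follows. This is an illustrative reconstruction, not the detector from the paper: it assumes a window of time-aligned samples from each microphone channel and scores every channel pair by the energy of their difference relative to their combined energy. Similar signals (normal speech) give a score near 0, while a signal present on only one channel (laser injection) gives a score near 1. The 0.5 threshold and the synthetic test signals are hypothetical and would need tuning against real recordings.

```python
import itertools
import numpy as np

def detect_laser_injection(window, threshold=0.5):
    """Flag a laser injection when microphone channels disagree strongly.

    window: array of shape (n_channels, n_samples) with time-aligned samples.
    Returns (is_attack, score, suspect_channel or None).
    """
    x = np.asarray(window, dtype=float)
    score = 0.0
    for i, j in itertools.combinations(range(x.shape[0]), 2):
        diff_energy = np.sum((x[i] - x[j]) ** 2)
        total_energy = np.sum(x[i] ** 2) + np.sum(x[j] ** 2) + 1e-12
        score = max(score, float(diff_energy / total_energy))
    is_attack = score > threshold
    # The injected channel is assumed to be the one carrying the most energy.
    suspect = int(np.argmax(np.sum(x ** 2, axis=1))) if is_attack else None
    return is_attack, score, suspect

# Synthetic 100 ms windows at 16 kHz: speech reaches both mics, a laser hits only mic 0.
rng = np.random.default_rng(1)
t = np.arange(1600) / 16_000
speech = np.sin(2 * np.pi * 300 * t)
voice = np.vstack([speech, 0.95 * speech]) + 0.01 * rng.standard_normal((2, 1600))
laser = np.vstack([speech, np.zeros(1600)]) + 0.01 * rng.standard_normal((2, 1600))
print(detect_laser_injection(voice)[:2], detect_laser_injection(laser))
```

Normalizing by the pair's combined energy makes the score independent of how loudly the user speaks, so a single threshold can work across volumes.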

Hobby Projects

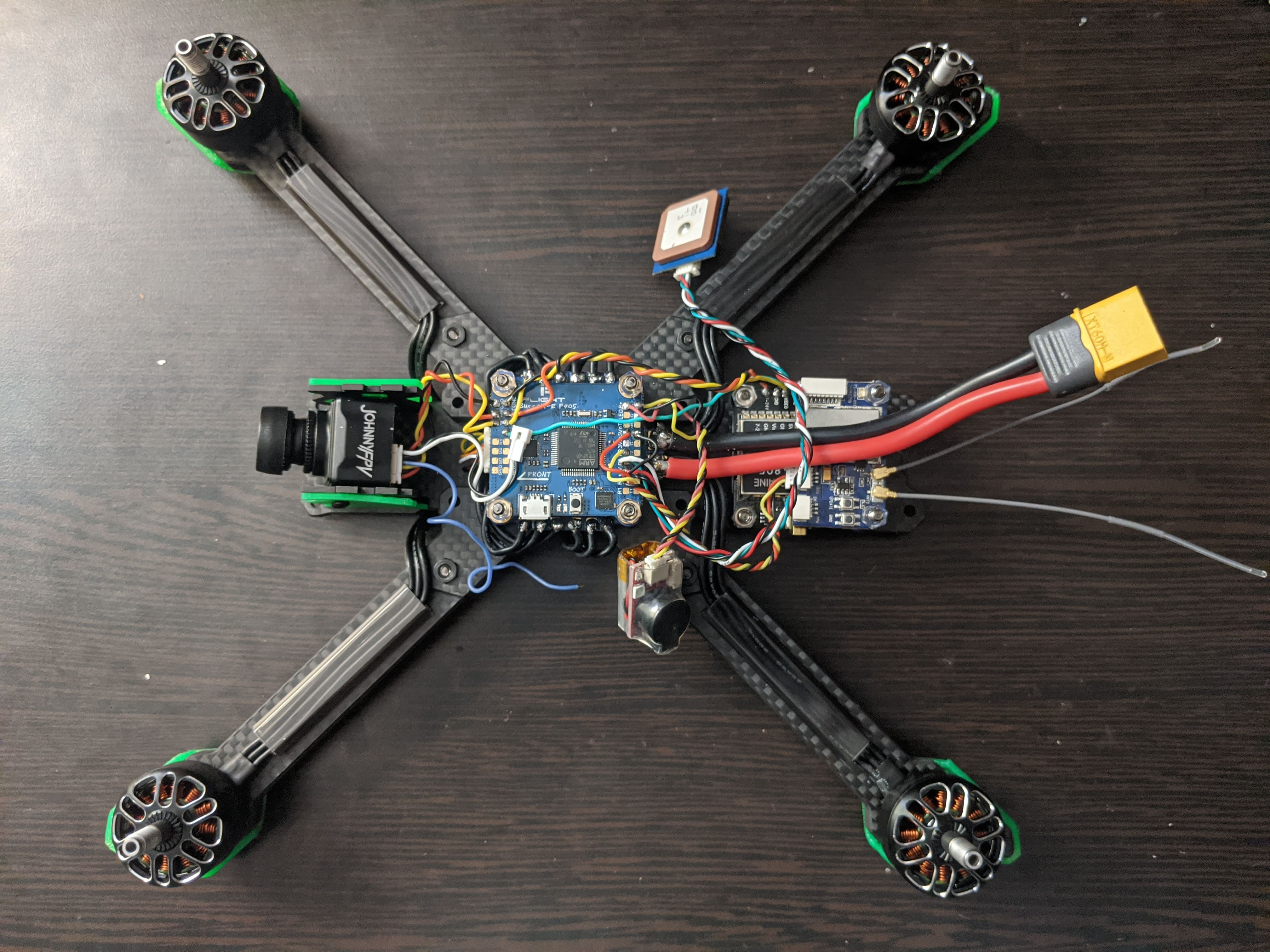

FPV Quadcopter Drone

Drones are used in many important fields around the world. Some of the growing fields in the industry are:

- Security

- Surveillance

- Infrastructure Inspection

- Rescue missions

- Product delivery

After analyzing these fields, I came up with the idea of building an FPV drone. Here are some of the unique ideas I considered for the quadcopter:

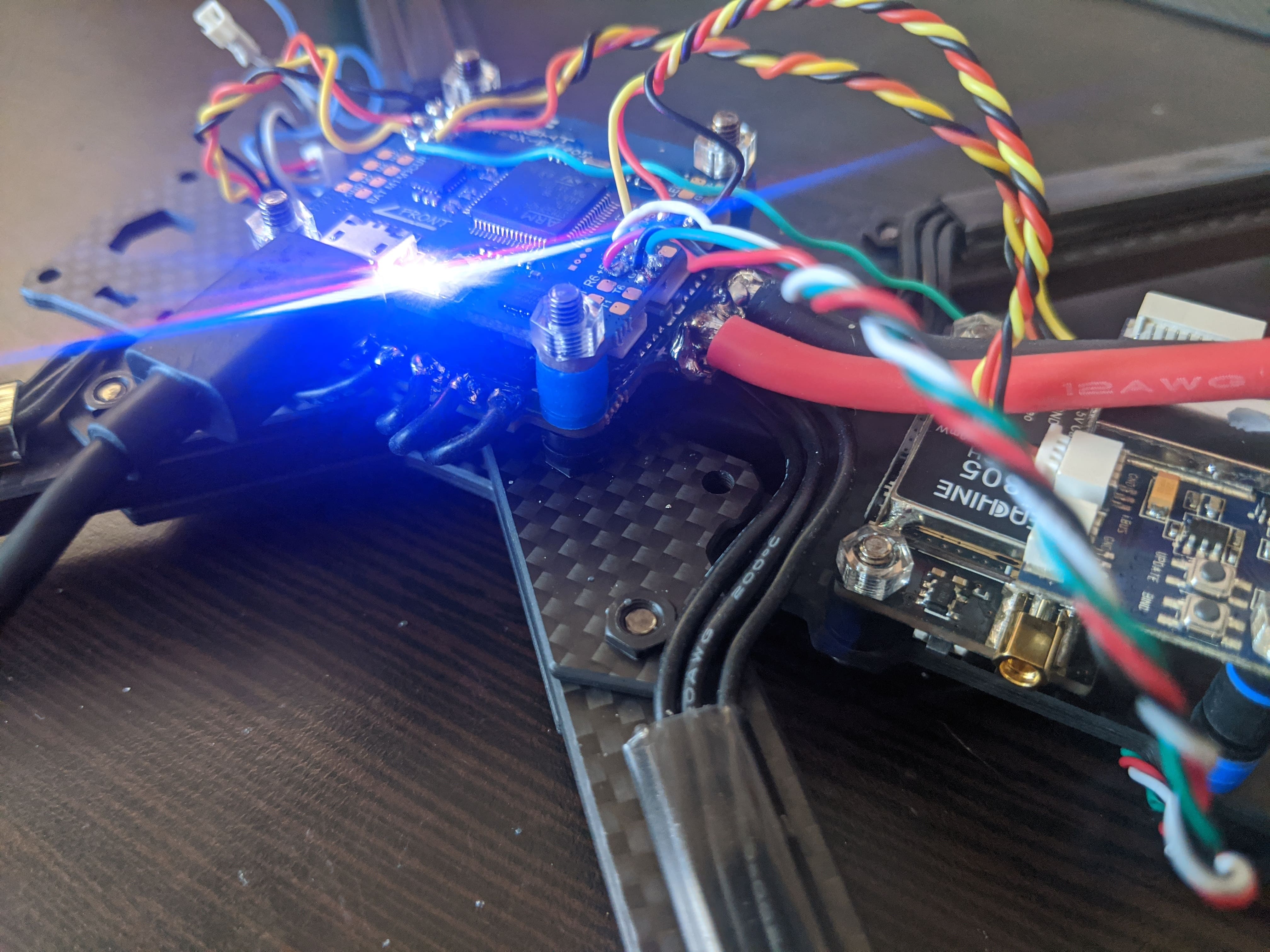

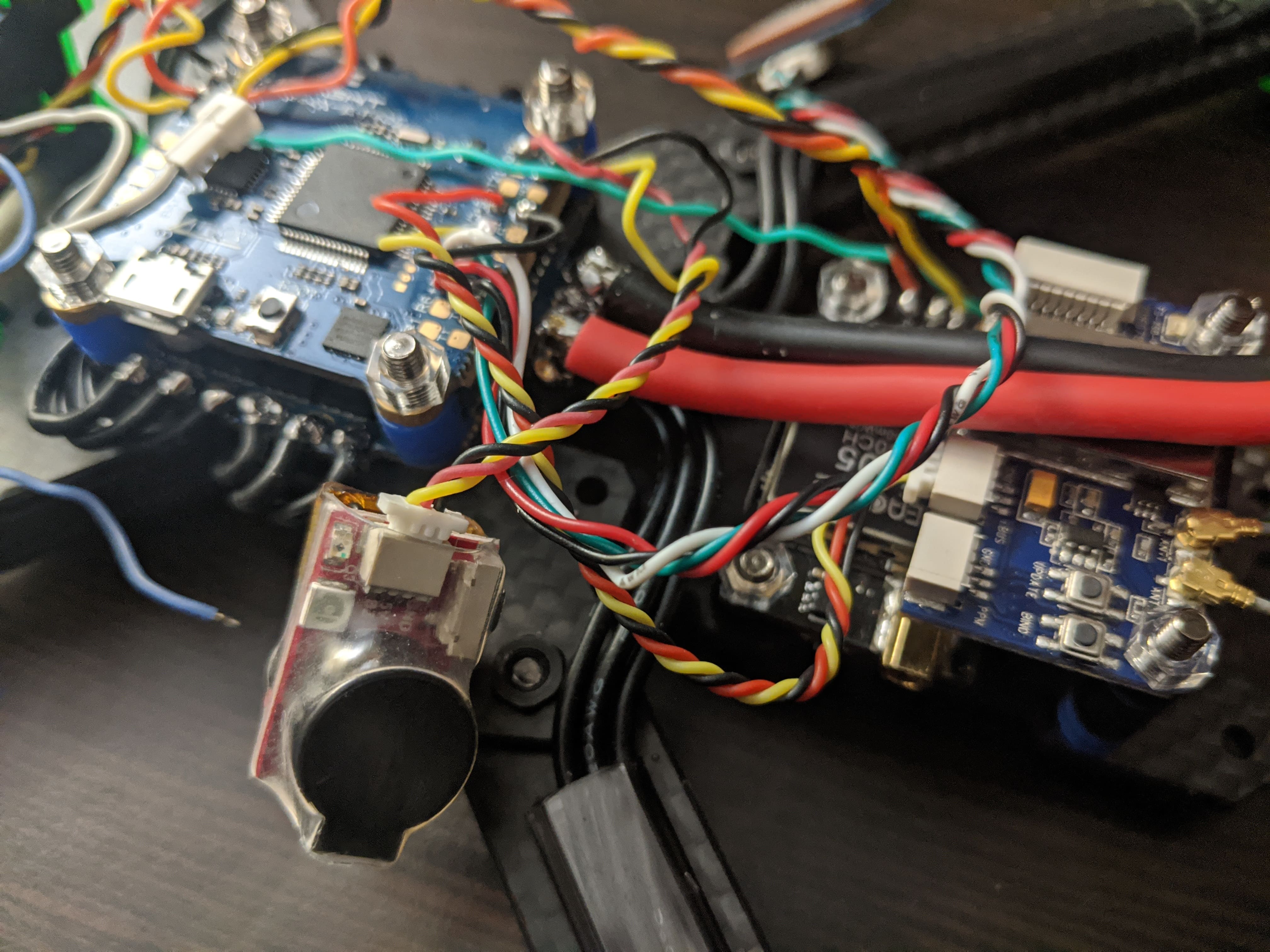

- Taking advantage of the rise of Virtual Reality (VR) technology, I decided to give my quadcopter an extra technological edge. I programmed and calibrated an FPV camera on the drone, whose live video feed is displayed in the FPV goggles worn by the pilot. This gives the pilot a clear picture of what the drone is seeing and helps them maneuver around obstacles more easily, giving the quadcopter better access to crowded places

- Connected a GPS module to the flight controller and programmed it to track the drone's location in case it loses its connection to the transmitter

- Used a carbon fiber frame with 5" arms for durability

- Used high-torque brushless motors for better thrust, and thus more power

- Soldered all 4 motors to a 4-in-1 Electronic Speed Control (ESC) module to reduce weight and free up space on the frame

- Used a dedicated video transmitter for the camera's first-person view, and a receiver for the flight-instruction signals from the controller

- Used the Betaflight software to calibrate and configure flight parameters such as yaw, roll, and pitch for smoother flight
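Betaflight stabilizes each axis (roll, pitch, and yaw) with a PID controller whose gains are among the parameters tuned during configuration. The sketch below is a generic textbook PID loop driving a toy single-axis model in which the controller output directly sets angular acceleration; it is not Betaflight's implementation, and the gains, loop rate, and plant are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint, measured, dt):
        """Return the control output for one loop iteration."""
        error = setpoint - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Toy roll-axis model: controller output = angular acceleration (deg/s^2).
roll_pid = PID(kp=8.0, ki=2.0, kd=0.02)  # hypothetical gains
dt = 0.001                                # 1 kHz loop (assumed)
roll_rate = 0.0                           # deg/s
target_rate = 100.0                       # deg/s commanded by the pilot's stick
for _ in range(10_000):                   # simulate 10 seconds
    roll_rate += roll_pid.update(target_rate, roll_rate, dt) * dt
print(f"roll rate after 10 s: {roll_rate:.1f} deg/s")
```

Raising `kp` makes the axis respond faster, `ki` removes steady-state error, and `kd` damps overshoot; tuning is the search for a balance between these on the real airframe.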

My future plan is to add autonomous features to this quadcopter:

- Human Tracking: Use computer vision to detect objects from the air and track the pilot (human)

- Package Delivery: Send coordinates to the drone so that it can autonomously deliver lightweight packages to that location
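Flying to given coordinates needs, at minimum, the distance and heading from the drone's current GPS fix to the target. The sketch below uses the standard haversine and initial-bearing formulas on a spherical Earth (mean radius 6,371 km). It is a planning helper only; the function name is hypothetical and not part of any flight-controller API.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (deg from north) between two GPS fixes."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    # Haversine formula for the central angle between the two points.
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))
    # Initial bearing: the heading to fly at the start of the great-circle path.
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing

# Usage: one degree due north along a meridian is roughly 111 km.
print(distance_and_bearing(0.0, 0.0, 1.0, 0.0))
```

For the short ranges of a small delivery drone, the spherical approximation is typically accurate to well under 1%.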

School Projects

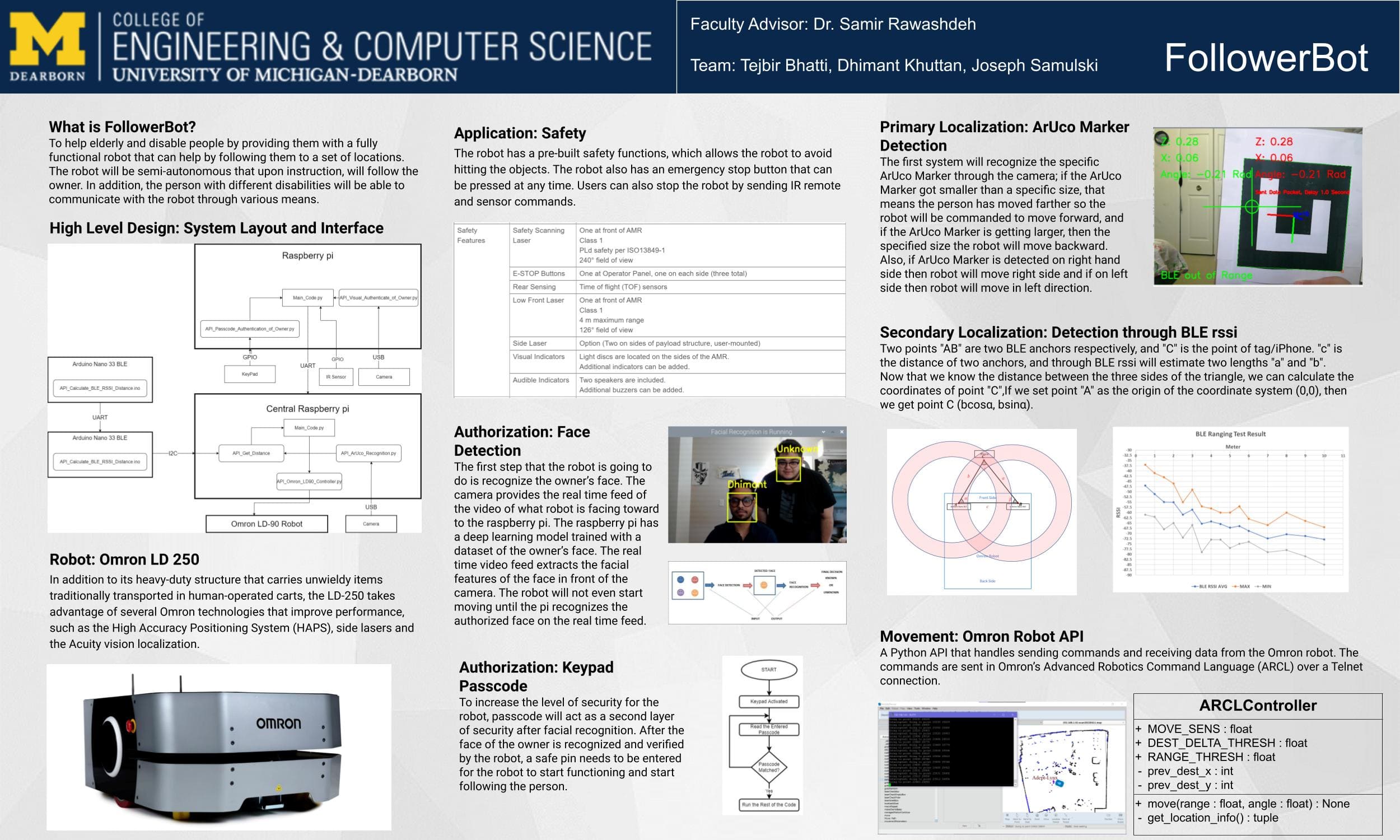

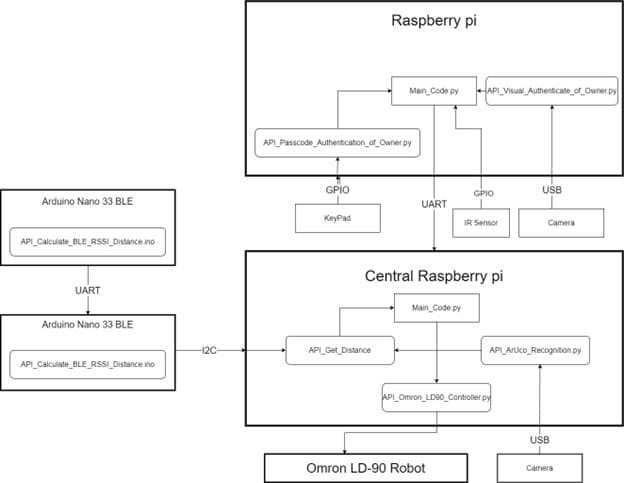

Capstone Project: Follower Bot

To help elderly people and people with disabilities by providing them with a fully functional robot that follows them to a set of locations. The robot will be semi-autonomous: upon instruction, it will follow its owner. In addition, people with different disabilities will be able to communicate with the robot through various means.

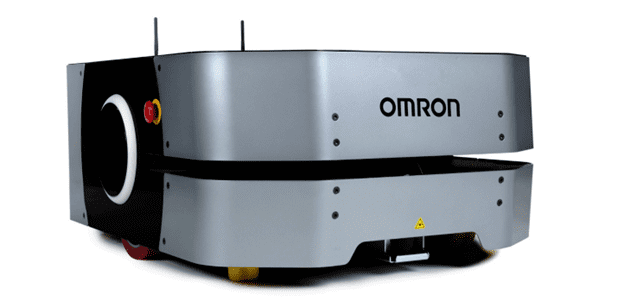

Robot: Omron LD-250

In addition to its heavy-duty structure, which carries unwieldy items traditionally transported in human-operated carts, the LD-250 takes advantage of several Omron technologies that improve performance, such as the High Accuracy Positioning System (HAPS), side lasers, and Acuity vision localization.

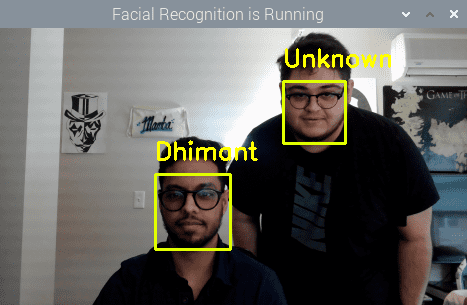

Authorization: Facial Recognition

The first thing the robot does is recognize the owner's face. The camera streams a real-time video feed of whatever the robot is facing to the Raspberry Pi, which runs a deep learning model trained on a dataset of the owner's face. Facial features are extracted from the face in front of the camera in each frame of the feed, and the robot will not start moving until the Pi recognizes the authorized face.
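The authorization gate described above can be sketched as follows, assuming the deep learning model turns each detected face into a fixed-length embedding vector. The embedding model itself is not shown, the function names are hypothetical, and the 0.6 distance threshold is an assumed value borrowed from common 128-dimensional face-embedding setups such as dlib's. A frame authorizes the robot only if its embedding is close to one of the owner's enrolled embeddings, and the robot stays still until such a frame arrives.

```python
import numpy as np

def is_owner(embedding, owner_embeddings, threshold=0.6):
    """True if the face embedding lies within `threshold` of any enrolled owner embedding."""
    enrolled = np.asarray(owner_embeddings, dtype=float)
    distances = np.linalg.norm(enrolled - np.asarray(embedding, dtype=float), axis=1)
    return bool(distances.min() < threshold)

def first_authorized_frame(frame_embeddings, owner_embeddings, threshold=0.6):
    """Index of the first frame showing the owner, or None if the owner never appears.

    Each element is the face embedding for that frame, or None when no face was detected.
    The robot should not move before this frame.
    """
    for index, embedding in enumerate(frame_embeddings):
        if embedding is not None and is_owner(embedding, owner_embeddings, threshold):
            return index
    return None

# Usage with toy 3-D vectors standing in for real embeddings.
owner = [[0.1, 0.2, 0.3], [0.12, 0.18, 0.31]]
frames = [None, [0.9, 0.1, 0.4], [0.11, 0.21, 0.29]]
print(first_authorized_frame(frames, owner))
```

Enrolling several embeddings of the owner (different lighting, angles) and taking the minimum distance makes the check more tolerant of pose changes than a single reference image.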

Authorization: Keypad Passcode

To increase the robot's level of security, a passcode acts as a second layer of security after facial recognition. Once the robot has recognized and verified the owner's face, a PIN must be entered before the robot starts functioning and following the person.
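One way to implement this second layer is to store only a salted hash of the PIN on the robot and compare digests in constant time, so the PIN is never kept in plain text. The sketch below uses Python's standard `hashlib.pbkdf2_hmac` and `hmac.compare_digest`. The iteration count, the function names, and the example PIN are hypothetical, and the original project may have checked the keypad input differently.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor for PBKDF2

def hash_pin(pin, salt=None):
    """Return (salt, digest) for a PIN; a fresh random salt is generated if none is given."""
    salt = os.urandom(16) if salt is None else salt
    return salt, hashlib.pbkdf2_hmac("sha256", pin.encode("utf-8"), salt, ITERATIONS)

def verify_pin(pin, salt, stored_digest):
    """Constant-time comparison of an entered PIN against the stored digest."""
    _, digest = hash_pin(pin, salt)
    return hmac.compare_digest(digest, stored_digest)

def may_start_following(face_verified, entered_pin, salt, stored_digest):
    """The keypad is only checked once facial recognition has already succeeded."""
    return face_verified and verify_pin(entered_pin, salt, stored_digest)

# Enrollment (done once) and a login attempt, with a hypothetical PIN.
salt, stored = hash_pin("4821")
print(may_start_following(True, "4821", salt, stored))
```

The `and` short-circuits, so a failed face check never even evaluates the keypad input, which mirrors the two-step order described above.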

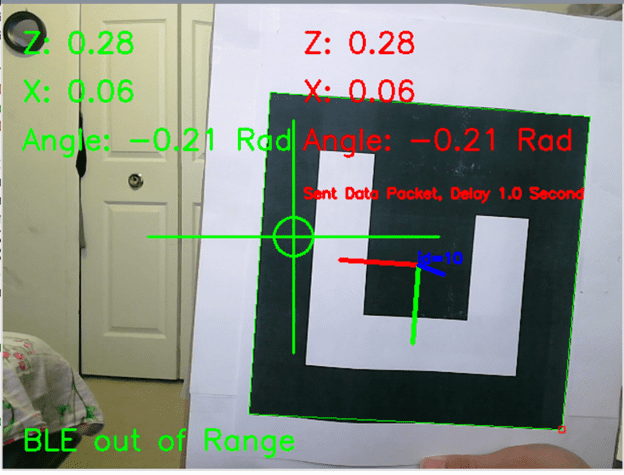

Primary Localization: ArUco Marker Detection

The primary system recognizes a specific ArUco marker through the camera. If the marker appears smaller than a specified size, the person has moved farther away, so the robot is commanded to move forward; if the marker appears larger than the specified size, the robot moves backward. Likewise, if the marker is detected on the right-hand side of the frame, the robot moves right, and if it is on the left-hand side, the robot moves left.
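These rules can be sketched as a small decision function. It assumes the marker's four corner points have already been found (for example with OpenCV's ArUco module) and are passed in as pixel coordinates; the function name, frame width, target size, tolerance, and center band are hypothetical tuning values.

```python
import math

def marker_command(corners, frame_width=640, target_side_px=80.0,
                   size_tol_px=10.0, center_band=0.15):
    """Map one detected marker to a (linear, turn) command.

    corners: four (x, y) pixel points of the marker, in order around its outline.
    """
    # Mean edge length approximates how large (how close) the marker appears.
    side = sum(math.dist(corners[k], corners[(k + 1) % 4]) for k in range(4)) / 4
    center_x = sum(p[0] for p in corners) / 4
    offset = (center_x - frame_width / 2) / frame_width  # -0.5 (far left) .. 0.5 (far right)

    if side < target_side_px - size_tol_px:
        linear = "forward"   # marker looks smaller: the person moved away
    elif side > target_side_px + size_tol_px:
        linear = "backward"  # marker looks larger: the person is too close
    else:
        linear = "hold"

    if offset > center_band:
        turn = "right"
    elif offset < -center_band:
        turn = "left"
    else:
        turn = "straight"
    return linear, turn

# Usage: a small marker in the middle of a 640-px-wide frame.
print(marker_command([(300, 220), (340, 220), (340, 260), (300, 260)]))
```

The tolerance and center band act as dead zones, so the robot does not oscillate back and forth when the person is already near the target distance and heading.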

Secondary Localization: Detection through BLE RSSI

Points A and B are two BLE anchors, and C is the location of the tag (an iPhone). c is the distance between the two anchors, and the two lengths a (from B to C) and b (from A to C) are estimated from the BLE RSSI. Since all three sides of the triangle are now known, we can calculate the coordinates of point C. If we set point A as the origin of the coordinate system (0, 0) and place B at (c, 0), the angle α at A follows from the law of cosines, cos α = (b² + c² - a²) / (2bc), and point C is (b cos α, b sin α).
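The localization steps above can be sketched in code. How RSSI is converted to distance is not specified here, so the sketch assumes the common log-distance path-loss model, d = 10^((P_1m - RSSI) / (10 n)), where P_1m is the RSSI measured at 1 m (a hypothetical -59 dBm) and n is the path-loss exponent (2.0 in free space). Because RSSI ranges are noisy, the cosine is clamped to [-1, 1]. Three side lengths also cannot tell which side of line AB the tag is on, so the sketch always returns the solution with y ≥ 0, matching (b cos α, b sin α).

```python
import math

def rssi_to_distance(rssi_dbm, rssi_at_1m=-59.0, path_loss_exponent=2.0):
    """Log-distance path-loss model (assumed): distance in meters from RSSI in dBm."""
    return 10 ** ((rssi_at_1m - rssi_dbm) / (10 * path_loss_exponent))

def locate_tag(a, b, c):
    """Coordinates of tag C, with anchor A at (0, 0) and anchor B at (c, 0).

    a = |BC|, b = |AC|, c = |AB|, all in the same unit.
    """
    cos_alpha = (b ** 2 + c ** 2 - a ** 2) / (2 * b * c)  # law of cosines at A
    cos_alpha = max(-1.0, min(1.0, cos_alpha))            # guard against noisy ranges
    alpha = math.acos(cos_alpha)
    return b * math.cos(alpha), b * math.sin(alpha)

# Usage: anchors 4 m apart; RSSI readings are converted to ranges first.
c = 4.0
b = rssi_to_distance(-66.0)  # range from anchor A
a = rssi_to_distance(-70.0)  # range from anchor B
print(locate_tag(a, b, c))
```

In practice the path-loss parameters would be calibrated per environment, since walls and bodies change n considerably.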

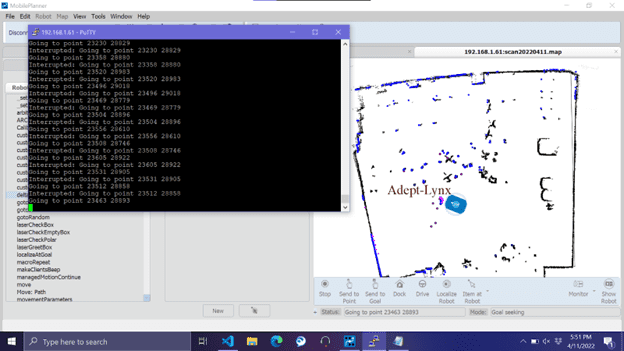

Movement: Omron Robot API

A Python API handles sending commands to and receiving data from the Omron robot. The commands are sent in Omron's Advanced Robotics Command Language (ARCL) over a Telnet connection.
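A minimal sketch of such an API layer is shown below. It talks to ARCL over a plain TCP socket, which is what a Telnet client does for a line-based protocol like this one. Several details are assumptions to check against Omron's ARCL reference: the port (7171 is used here as the default), CRLF line endings, the login flow (send the password, then read until a line containing "End of commands"), and the `goto` command and goal name in the usage comment. The class and function names are hypothetical, not the project's actual API.

```python
import socket

ARCL_PORT = 7171  # assumed default ARCL port; check the robot's configuration

class ArclSession:
    """Line-oriented ARCL session over a connected socket (hypothetical wrapper)."""

    def __init__(self, sock):
        self._sock = sock
        self._reader = sock.makefile("rb")

    def send_line(self, text):
        self._sock.sendall(text.encode("ascii") + b"\r\n")  # CRLF line ending (assumed)

    def read_line(self):
        return self._reader.readline().decode("ascii").strip()

    def command(self, text):
        """Send one command and return the first reply line."""
        self.send_line(text)
        return self.read_line()

    def drain_until(self, marker):
        """Discard incoming lines until one contains `marker` or the connection closes."""
        while True:
            line = self._reader.readline()
            if not line or marker in line.decode("ascii", errors="replace"):
                return

def open_session(host, password, port=ARCL_PORT, timeout=5.0):
    """Connect, answer the password prompt, and skip the post-login banner (assumed format)."""
    sock = socket.create_connection((host, port), timeout=timeout)
    session = ArclSession(sock)
    session.send_line(password)
    session.drain_until("End of commands")
    return session

# Usage (requires a reachable robot; address, password, and goal name are hypothetical):
# session = open_session("192.168.0.10", "your-arcl-password")
# print(session.command("goto Kitchen"))
```

Wrapping the socket in a buffered reader (`makefile`) keeps line handling simple, because replies can arrive split across or merged within TCP packets.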

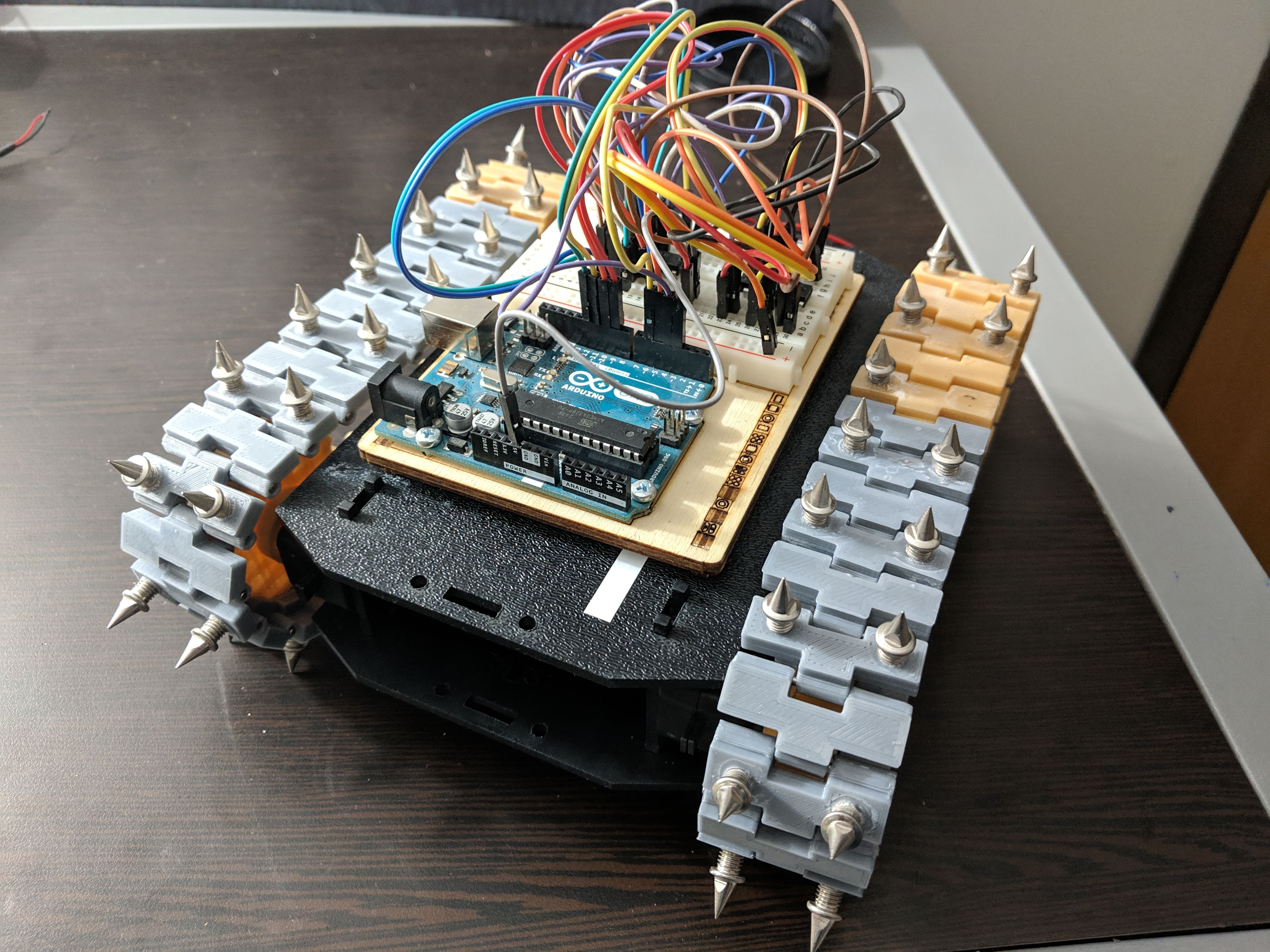

Mars Rover

As an engineering project during my freshman year, we were asked to build a Mars rover as a team and compete against the other teams' rovers. The rovers were tested on a huge test bed designed as a replica of the Martian terrain. The competition setting is described below:

We built an Arduino-based Mars rover as a means to design and test wheels that achieve traction on a simulated Mars surface. Each vehicle was placed on multiple test beds and switched on with its back wheels touching the wall, then timed until its front wheels touched the opposite wall. The winning solution had the fastest time and the least wheel damage. Bonus points were awarded to any design that could also climb the most challenging test-bed terrain. Each team received the same chassis.

My team's rover was among the fastest of over 30 teams and was selected to be presented at the Detroit Maker Faire 2019.

Contact

If you want to know more about any of my projects or experience please feel free to reach out to me! :)